Intelligent Computation Paradigm

The basis for Non-probabilistic AI

What is meant by Intelligent Computation

We consider any AI system, whether it handles audio, face recognition, text understanding, or semantic database queries, to be a special, intelligent type of computation. We place it alongside classical, quantum, and probabilistic computation.

In contrast to those, however, intelligent computations lack axiomatics; they do not yet have primitive building blocks or composition operations.

For example, in quantum computation there are universal quantum gates and universal ways to compose them so that any computation can be expressed through them. This allows universal quantum machines. The same applies to classical computation: there are bitwise operations and control-flow operations that allow any computation or algorithm to be expressed through them.
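To make the idea of a universal primitive concrete for the classical case, here is a minimal Python sketch. It relies on a well-known fact: every Boolean function can be built from the NAND gate alone. The function names (`nand`, `half_adder`, `add_bits`, and so on) are chosen for this illustration only. The loop in `add_bits` stands in for the control-flow side of the story.

```python
def nand(a: int, b: int) -> int:
    """The only primitive used below: NOT (a AND b) on bits 0/1."""
    return 1 - (a & b)


# Everything else is a composition of NAND.
def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


def xor(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Return (sum bit, carry bit) for two input bits."""
    return xor(a, b), and_(a, b)


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Return (sum bit, carry out) for two input bits plus a carry."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_(c1, c2)


def add_bits(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition modulo 2**width, bit by bit."""
    carry = 0
    result = 0
    for i in range(width):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result
```

Calling `add_bits(13, 29)` adds two integers using nothing but compositions of one gate plus a loop. Intelligent computation has no known analogue of `nand` yet, which is the gap this post is about.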

This is not the case for intelligent computation. There is no insight into what the elementary intelligent operation is. Unfortunately, the mainstream has even taken the opposite route — scaling up and training large black boxes. Problems with safety, reliability, and interpretability are all problems of the black box, not problems of intelligence itself.

This should not be confused with the established term Computational Intelligence, nor with step-by-step logical-reasoning algorithms that traverse decision trees or graphs. The focus here is on the cognitive part of intelligence: the computation that “feels” the context. Those stepwise search methodologies are complementary to it.

Discovering universal and composable primitives of intelligent computation is what will unlock non-probabilistic AI.

Breaking the Deep-Thinking Loop

Let’s now consider deep thinking, or the chain-of-thought approach. A large language model is queried multiple times: instead of a one-shot call, it is used repeatedly, advancing through a reasoning plan or revising its own outputs to correct them.

You can think of it as a large, heavy computation performed recursively or iteratively. Some criteria determine when those iterations stop. Benchmarks have shown that this process can extract more intelligence from LLM systems.

But what do these iterations actually bring? It’s often said that more computation leads to better accuracy. But does the amount of computation itself produce more intelligence? No. So what problem do those extra iterations actually solve? They organize the top-down flow of information.

Because we are searching for the smallest elementary building blocks, let’s assume we split this huge loop into one hundred small loops. We preserve the overall amount of computation but reshape it into many smaller loops, allowing them to be composed in various ways. Each small loop then becomes responsible for a primitive intelligent operation.

Smallest Intelligent Computation

Context awareness is essential. The ability to identify and handle context and its parts is intelligence. Therefore, these small, local iterations must be linked to the process of identifying context and its pieces.

The next step is to determine what these iterations are for — what context identification actually requires. Context and its constituents are related like the chicken and the egg. The dialectical question arises: what comes first? We don’t know what the pieces are until we form the context and verify that the chosen pieces fit nicely; yet we also cannot form the context without the pieces that compose it.

This is a manifestation of the fact that there are bottom-up and top-down flows of information, and the two need to agree. Together they define two layers: pieces and context. The dialectical mutual definition of these two layers can be expressed mathematically as an iterative optimization process that must be run at inference time to identify the context and its components simultaneously. When the human mind successfully identifies them, it is called an “aha moment”. This is the essence of intelligent computation and the first primitive we are looking for.
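The mutual definition of pieces and context can be illustrated with a familiar stand-in. The sketch below is a tiny one-dimensional k-means (Lloyd-style alternating minimization), not the primitive proposed in this post. It is used here only because it has the same chicken-and-egg shape: group summaries decide which points belong where, and the points in turn redefine the summaries. The names `fit_contexts`, `points`, and `centers` are assumptions made for this example.

```python
def fit_contexts(points, centers, max_iters=100):
    """Alternate two flows until they agree (toy 1-D k-means).

    points  : list of floats (the "pieces")
    centers : initial guesses for the group summaries (the "contexts")
    Returns (centers, assignment), where assignment[i] is the index of
    the center that points[i] ended up with.
    """
    centers = list(centers)  # work on a copy, leave the caller's list alone
    assignment = None
    for _ in range(max_iters):
        # Top-down: the current contexts decide where each piece belongs.
        new_assignment = [
            min(range(len(centers)), key=lambda j: (p - centers[j]) ** 2)
            for p in points
        ]
        # Stop when both flows agree, i.e. nothing changes any more.
        if new_assignment == assignment:
            break
        assignment = new_assignment
        # Bottom-up: the assigned pieces redefine each context.
        for j in range(len(centers)):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:
                centers[j] = sum(members) / len(members)
    return centers, assignment


points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centers, assignment = fit_contexts(points, centers=[0.0, 10.0])
```

Neither the grouping nor the summaries are known in advance. Both emerge together from the iteration, and the loop stops at the point where the two flows no longer contradict each other.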

Result of Primitive Intelligent Computation

The final step is to understand the type of solution we are searching for during the optimization. Is it a number? A vector?

Context and its pieces form relationships. Understanding context means identifying all these relations. Therefore, we are solving for a system, for a structure — not for a number or a vector.

Intelligent Software

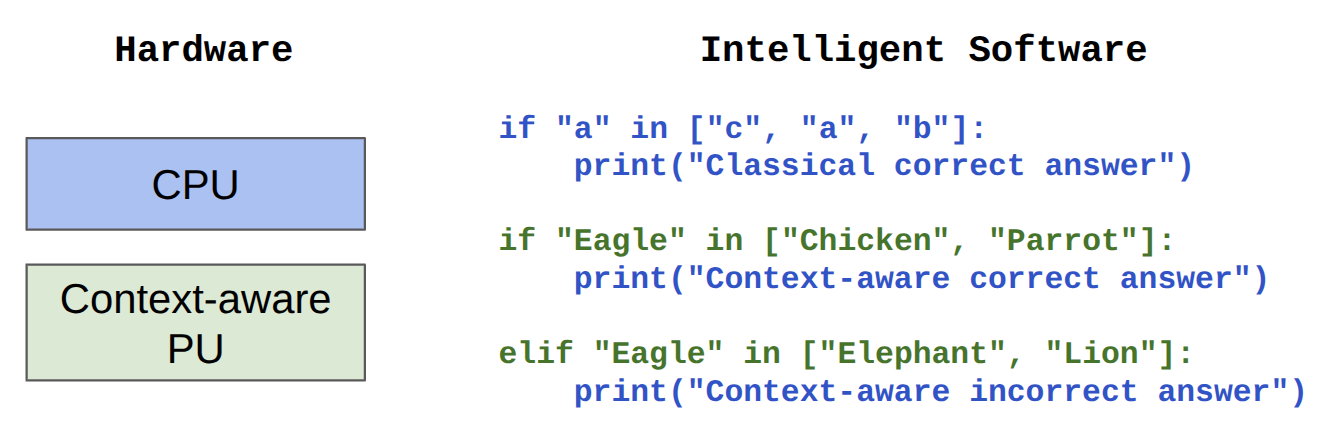

Once elementary building blocks and composition rules exist, we can talk about writing programs. Programming languages should get native support for intelligent operations. Then, complex compositions of primitive intelligent operations could be constructed to analyze or search for meaning within signals.

Executing an intelligent operation, as discussed above, involves running optimization iterations to search for a structure. In executing an intelligent program, this becomes the search for compositions of structures, possibly sharing their components in intricate ways.

Intelligent operations can be seamlessly integrated and interleaved with standard processing steps in the same source code. Such programs would be aware of contexts present both in the input data and in their own source code. These are intelligent programs.

Programming languages are extremely flexible. The space of possible programs — and thus possible intelligent programs — is almost infinite. The possibilities go far beyond what trained neural networks can achieve. Pattern-matching and memory-based intelligence can be replaced with executional intelligence.

At the same time, software source code is transparent. Just as one can estimate execution time, memory consumption, or algorithmic complexity by inspecting nested “for” loops or “if-else” statements, it would be possible to see and control the intelligence in the code.

For example: chaining three contexts with five constituents each may result in level X of intelligence, while composing them into a larger context may yield level Y. Understanding common-sense speech at a five-year-old level could require three nested levels of contexts, and so on.
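As a rough illustration of what "reading intelligence off the code" might look like, the following sketch models contexts as a plain tree and computes two structural measures: nesting depth and number of constituents. The `Context` class and both measures are hypothetical and invented for this example. The post does not define how levels X and Y would actually be quantified.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container: a named context and its parts.

    A part is either a plain string (a constituent) or a nested Context.
    """
    name: str
    parts: list = field(default_factory=list)

    def depth(self) -> int:
        """Number of nested context levels, counting this one."""
        nested = [p.depth() for p in self.parts if isinstance(p, Context)]
        return 1 + max(nested, default=0)

    def constituents(self) -> int:
        """Total number of leaf constituents under this context."""
        return sum(
            p.constituents() if isinstance(p, Context) else 1
            for p in self.parts
        )


def leaf_context(name: str, n: int = 5) -> Context:
    """Build a flat context with n placeholder constituents."""
    return Context(name, [f"{name}-part{i}" for i in range(n)])


# Three contexts with five constituents each, simply chained...
chain = [leaf_context("a"), leaf_context("b"), leaf_context("c")]

# ...versus the same three composed into one larger context.
composed = Context("whole", list(chain))

print([c.depth() for c in chain], [c.constituents() for c in chain])
print(composed.depth(), composed.constituents())
```

The point is only that such measures can be obtained by inspecting the program's structure, in the same way loop nesting can be inspected to estimate complexity.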

Intelligent capabilities thus become transparent, interpretable, reliable, and safe.

Black boxes can be replaced with software.

What about neural nets?

Neural networks are function approximators. They can continue to play that role. However, the “intelligence” attribute can be achieved in a more direct and flexible way.

For example, one could train a neural network to take the coefficients of a quadratic equation as input and output its roots. But it is also possible to compute the solutions directly — via explicit formulas or iterative optimization. Both approaches have pros and cons, and hybrid strategies are possible.
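The two direct routes mentioned above can be sketched in a few lines of Python. `roots_closed_form` applies the standard quadratic formula, and `root_newton` finds one root by Newton's iteration from a starting guess. The function names, tolerance, and iteration cap are choices made for this illustration.

```python
import cmath


def roots_closed_form(a: float, b: float, c: float) -> tuple[complex, complex]:
    """Both roots of a*x**2 + b*x + c = 0 via the quadratic formula."""
    if a == 0:
        raise ValueError("not a quadratic equation: a must be non-zero")
    d = cmath.sqrt(b * b - 4 * a * c)  # complex sqrt also handles d < 0
    return (-b + d) / (2 * a), (-b - d) / (2 * a)


def root_newton(a, b, c, x0, tol=1e-12, max_iters=100):
    """One real root via Newton's method, starting from the guess x0."""
    x = x0
    for _ in range(max_iters):
        fx = a * x * x + b * x + c
        dfx = 2 * a * x + b
        if dfx == 0:
            raise ZeroDivisionError("derivative vanished; try another x0")
        step = fx / dfx
        x -= step
        if abs(step) < tol:
            return x
    raise RuntimeError("Newton iteration did not converge")


# x**2 - 3x + 2 = 0 has the roots 1 and 2.
print(roots_closed_form(1, -3, 2))
print(root_newton(1, -3, 2, x0=5.0))  # converges to the root near 2
print(root_newton(1, -3, 2, x0=0.0))  # converges to the root near 1
```

The closed form is exact and immediate but exists only for special equation families. The iterative route is more general, though it needs a starting point and a stopping rule. A trained approximator could supply that starting point, which is one way the hybrid strategy can work.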

In AI, neural network black boxes follow the first approach because there is not yet any insight into which direct computation embodies intelligence. The Intelligent Computation Paradigm described here points the other way. While neural nets pattern-match the solution to context identification equations, it is possible to solve directly for the structures holding the context, with much the same pros, cons, and strategies for combining the two approaches.

What about perception?

Human perception across various modalities (audio, visual, etc.) is the same process of identifying context and its pieces, only operating on a different time scale. In speech, for instance, one usually talks about phoneme recognition, but what is a “phoneme”? It is the identified system of relations between the context and its pieces.

Note

Silent AI’s central concept is Integrity. It has many names depending on the field. The most recognizable name is “Context”, which is used throughout this post for readability.

Wherever the word Context appears, it should be read as Integrity, because the underlying thinking applies across all domains.

© 2025 Oleksandr Korostylov (Silent AI). Reproduction or derivative use without attribution is prohibited.